1. ItemReaders and ItemWriters

All batch processing can be described in its simplest form as reading in large amounts of data, performing some type of calculation or transformation, and writing the result out. Spring Batch provides three key interfaces to help perform bulk reading and writing: ItemReader, ItemProcessor, and ItemWriter.

1.1. ItemReader

Although a simple concept, an ItemReader is the means for providing data from many different types of input. The most general examples include:

Flat File: Flat-file item readers read lines of data from a flat file that typically describes records with fields of data defined by fixed positions in the file or delimited by some special character (such as a comma).

XML: XML ItemReaders process XML independently of technologies used for parsing, mapping and validating objects. Input data allows for the validation of an XML file against an XSD schema.

Database: A database resource is accessed to return resultsets which can be mapped to objects for processing. The default SQL ItemReader implementations invoke a RowMapper to return objects, keep track of the current row if restart is required, store basic statistics, and provide some transaction enhancements that are explained later.

There are many more possibilities, but we focus on the basic ones for this chapter. A complete list of all available ItemReader implementations can be found in Appendix A.

ItemReader is a basic interface for generic input operations, as shown in the following interface definition:

public interface ItemReader<T> {

T read() throws Exception, UnexpectedInputException, ParseException, NonTransientResourceException;

}

The read method defines the most essential contract of the ItemReader. Calling it returns one item or null if no more items are left. An item might represent a line in a file, a row in a database, or an element in an XML file. It is generally expected that these are mapped to a usable domain object (such as Trade, Foo, or others), but there is no requirement in the contract to do so.

It is expected that implementations of the ItemReader interface are forward only. However, if the underlying resource is transactional (such as a JMS queue) then calling read may return the same logical item on subsequent calls in a rollback scenario. It is also worth noting that a lack of items to process by an ItemReader does not cause an exception to be thrown. For example, a database ItemReader that is configured with a query that returns 0 results returns null on the first invocation of read.
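The read contract described above (forward only, null when exhausted, no exception for empty input) can be illustrated with a minimal sketch. It assumes a local stand-in interface, SketchItemReader, so that it compiles without Spring Batch on the classpath; ListBackedReader and ListBackedReaderDemo are hypothetical names, not framework classes.

```java
import java.util.Iterator;
import java.util.List;

// Local stand-in for the ItemReader interface shown above (assumption:
// declared here only so the sketch compiles without Spring Batch).
interface SketchItemReader<T> {
    T read() throws Exception;
}

// Hypothetical reader over an in-memory list: forward only, null when exhausted.
class ListBackedReader<T> implements SketchItemReader<T> {
    private final Iterator<T> iterator;

    ListBackedReader(List<T> items) {
        this.iterator = items.iterator();
    }

    @Override
    public T read() {
        // Running out of items is not an error: null signals "no more items".
        return iterator.hasNext() ? iterator.next() : null;
    }
}

class ListBackedReaderDemo {
    // Drains a reader the way a caller would: loop until read() returns null.
    static <T> int countItems(SketchItemReader<T> reader) {
        int count = 0;
        try {
            while (reader.read() != null) {
                count++;
            }
        } catch (Exception e) {
            throw new IllegalStateException(e);
        }
        return count;
    }

    public static void main(String[] args) {
        System.out.println(countItems(new ListBackedReader<>(List.of("a", "b", "c"))));
        // An empty source returns null on the very first read.
        System.out.println(new ListBackedReader<String>(List.of()).read());
    }
}
```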

1.2. ItemWriter

ItemWriter is similar in functionality to an ItemReader but with inverse operations. Resources still need to be located, opened, and closed but they differ in that an ItemWriter writes out, rather than reading in. In the case of databases or queues, these operations may be inserts, updates, or sends. The format of the serialization of the output is specific to each batch job.

As with ItemReader, ItemWriter is a fairly generic interface, as shown in the following interface definition:

public interface ItemWriter<T> {

void write(List<? extends T> items) throws Exception;

}

As with read on ItemReader, write provides the basic contract of ItemWriter. It attempts to write out the list of items passed in as long as it is open. Because it is generally expected that items are 'batched' together into a chunk and then output, the interface accepts a list of items, rather than an item by itself. After writing out the list, any flushing that may be necessary can be performed before returning from the write method. For example, if writing to a Hibernate DAO, multiple calls to write can be made, one for each item. The writer can then call flush on the Hibernate session before returning.
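The "write each item, then flush once per chunk" idea can be sketched without Hibernate. In this hedged example, SketchItemWriter is a local stand-in for the interface above, and BufferingWriter is a hypothetical class whose pending list plays the role of a session cache and whose flushed list plays the role of the data store.

```java
import java.util.ArrayList;
import java.util.List;

// Local stand-in mirroring the ItemWriter interface shown above (assumption).
interface SketchItemWriter<T> {
    void write(List<? extends T> items) throws Exception;
}

// Hypothetical writer: handles items one at a time, then flushes once per chunk.
class BufferingWriter implements SketchItemWriter<String> {
    private final List<String> pending = new ArrayList<>(); // stands in for a session cache
    final List<String> flushed = new ArrayList<>();         // stands in for the data store
    int flushCount = 0;

    @Override
    public void write(List<? extends String> items) {
        for (String item : items) {
            pending.add(item);   // one "DAO call" per item
        }
        flush();                 // flush before returning from write
    }

    private void flush() {
        flushed.addAll(pending);
        pending.clear();
        flushCount++;
    }
}

class BufferingWriterDemo {
    public static void main(String[] args) {
        BufferingWriter writer = new BufferingWriter();
        writer.write(List.of("a", "b")); // first chunk
        writer.write(List.of("c"));      // second chunk
        System.out.println(writer.flushed + " flushes=" + writer.flushCount);
    }
}
```

Two chunks produce two flushes, regardless of how many items each chunk holds.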

1.3. ItemProcessor

The ItemReader and ItemWriter interfaces are both very useful for their specific tasks, but what if you want to insert business logic before writing? One option for both reading and writing is to use the composite pattern: Create an ItemWriter that contains another ItemWriter or an ItemReader that contains another ItemReader. The following code shows an example:

public class CompositeItemWriter<T> implements ItemWriter<T> {

ItemWriter<T> itemWriter;

public CompositeItemWriter(ItemWriter<T> itemWriter) {

this.itemWriter = itemWriter;

}

public void write(List<? extends T> items) throws Exception {

//Add business logic here

itemWriter.write(items);

}

public void setDelegate(ItemWriter<T> itemWriter){

this.itemWriter = itemWriter;

}

}

The preceding class contains another ItemWriter to which it delegates after having provided some business logic. This pattern could easily be used for an ItemReader as well, perhaps to obtain more reference data based upon the input that was provided by the main ItemReader. It is also useful if you need to control the call to write yourself. However, if you only want to 'transform' the item passed in for writing before it is actually written, you need not write yourself. You can just modify the item. For this scenario, Spring Batch provides the ItemProcessor interface, as shown in the following interface definition:

public interface ItemProcessor<I, O> {

O process(I item) throws Exception;

}

An ItemProcessor is simple. Given one object, transform it and return another. The provided object may or may not be of the same type. The point is that business logic may be applied within the process, and it is completely up to the developer to create that logic. An ItemProcessor can be wired directly into a step. For example, assume an ItemReader provides a class of type Foo and that it needs to be converted to type Bar before being written out. The following example shows an ItemProcessor that performs the conversion:

public class Foo {}

public class Bar {

public Bar(Foo foo) {}

}

public class FooProcessor implements ItemProcessor<Foo,Bar>{

public Bar process(Foo foo) throws Exception {

//Perform simple transformation, convert a Foo to a Bar

return new Bar(foo);

}

}

public class BarWriter implements ItemWriter<Bar>{

public void write(List<? extends Bar> bars) throws Exception {

//write bars

}

}

In the preceding example, there is a class Foo, a class Bar, and a class FooProcessor that adheres to the ItemProcessor interface. The transformation is simple, but any type of transformation could be done here. The BarWriter writes Bar objects, throwing an exception if any other type is provided. Similarly, the FooProcessor throws an exception if anything but a Foo is provided. The FooProcessor can then be injected into a Step, as shown in the following example:

<job id="ioSampleJob">

<step name="step1">

<tasklet>

<chunk reader="fooReader" processor="fooProcessor" writer="barWriter"

commit-interval="2"/>

</tasklet>

</step>

</job>

@Bean

public Job ioSampleJob() {

return this.jobBuilderFactory.get("ioSampleJob")

.start(step1())

.build();

}

@Bean

public Step step1() {

return this.stepBuilderFactory.get("step1")

.<Foo, Bar>chunk(2)

.reader(fooReader())

.processor(fooProcessor())

.writer(barWriter())

.build();

}

1.3.1. Chaining ItemProcessors

Performing a single transformation is useful in many scenarios, but what if you want to 'chain' together multiple ItemProcessor implementations? This can be accomplished using the composite pattern mentioned previously. To extend the previous single-transformation example, Foo is transformed to Bar, which is then transformed to Foobar and written out, as shown in the following example:

public class Foo {}

public class Bar {

public Bar(Foo foo) {}

}

public class Foobar {

public Foobar(Bar bar) {}

}

public class FooProcessor implements ItemProcessor<Foo,Bar>{

public Bar process(Foo foo) throws Exception {

//Perform simple transformation, convert a Foo to a Bar

return new Bar(foo);

}

}

public class BarProcessor implements ItemProcessor<Bar,Foobar>{

public Foobar process(Bar bar) throws Exception {

return new Foobar(bar);

}

}

public class FoobarWriter implements ItemWriter<Foobar>{

public void write(List<? extends Foobar> items) throws Exception {

//write items

}

}

A FooProcessor and a BarProcessor can be 'chained' together to give the resultant Foobar, as shown in the following example:

CompositeItemProcessor<Foo,Foobar> compositeProcessor =

new CompositeItemProcessor<Foo,Foobar>();

List<ItemProcessor<?, ?>> itemProcessors = new ArrayList<>();

itemProcessors.add(new FooProcessor());

itemProcessors.add(new BarProcessor());

compositeProcessor.setDelegates(itemProcessors);

Just as with the previous example, the composite processor can be configured into the Step:

<job id="ioSampleJob">

<step name="step1">

<tasklet>

<chunk reader="fooReader" processor="compositeItemProcessor" writer="foobarWriter"

commit-interval="2"/>

</tasklet>

</step>

</job>

<bean id="compositeItemProcessor"

class="org.springframework.batch.item.support.CompositeItemProcessor">

<property name="delegates">

<list>

<bean class="..FooProcessor" />

<bean class="..BarProcessor" />

</list>

</property>

</bean>

@Bean

public Job ioSampleJob() {

return this.jobBuilderFactory.get("ioSampleJob")

.start(step1())

.build();

}

@Bean

public Step step1() {

return this.stepBuilderFactory.get("step1")

.<Foo, Foobar>chunk(2)

.reader(fooReader())

.processor(compositeProcessor())

.writer(foobarWriter())

.build();

}

@Bean

public CompositeItemProcessor compositeProcessor() {

List<ItemProcessor> delegates = new ArrayList<>(2);

delegates.add(new FooProcessor());

delegates.add(new BarProcessor());

CompositeItemProcessor processor = new CompositeItemProcessor();

processor.setDelegates(delegates);

return processor;

}

1.3.2. Filtering Records

One typical use for an item processor is to filter out records before they are passed to the ItemWriter. Filtering is an action distinct from skipping. Skipping indicates that a record is invalid, while filtering simply indicates that a record should not be written.

For example, consider a batch job that reads a file containing three different types of records: records to insert, records to update, and records to delete. If record deletion is not supported by the system, then we would not want to send any "delete" records to the ItemWriter. But, since these records are not actually bad records, we would want to filter them out rather than skip them. As a result, the ItemWriter would receive only "insert" and "update" records.

To filter a record, you can return null from the ItemProcessor. The framework detects that the result is null and avoids adding that item to the list of records delivered to the ItemWriter. As usual, an exception thrown from the ItemProcessor results in a skip.
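The filtering rule can be sketched as follows. This is a simplified illustration, not the framework's chunk-processing code: SketchItemProcessor is a local stand-in for the ItemProcessor interface, DeleteFilteringProcessor is a hypothetical processor, and the "insert:", "update:", and "delete:" record prefixes are assumed purely for the example.

```java
import java.util.ArrayList;
import java.util.List;

// Local stand-in mirroring the ItemProcessor interface shown earlier (assumption).
interface SketchItemProcessor<I, O> {
    O process(I item) throws Exception;
}

// Hypothetical processor: filters out "delete" records by returning null.
class DeleteFilteringProcessor implements SketchItemProcessor<String, String> {
    @Override
    public String process(String record) {
        return record.startsWith("delete") ? null : record;
    }
}

class FilteringDemo {
    // Simplified stand-in for chunk handling: null results never reach the writer.
    static List<String> processChunk(List<String> chunk) {
        SketchItemProcessor<String, String> processor = new DeleteFilteringProcessor();
        List<String> toWrite = new ArrayList<>();
        try {
            for (String record : chunk) {
                String result = processor.process(record);
                if (result != null) {
                    toWrite.add(result);
                }
            }
        } catch (Exception e) {
            throw new IllegalStateException(e);
        }
        return toWrite;
    }

    public static void main(String[] args) {
        System.out.println(processChunk(List.of("insert:1", "delete:2", "update:3")));
    }
}
```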

1.3.3. Fault Tolerance

When a chunk is rolled back, items that have been cached during reading may be reprocessed. If a step is configured to be fault tolerant (typically by using skip or retry processing), any ItemProcessor used should be implemented in a way that is idempotent. Typically that would consist of performing no changes on the input item for the ItemProcessor and only updating the instance that is the result.
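One way to read the idempotency advice is "never mutate the input; build a new result". The sketch below assumes hypothetical Trade and PricedTrade types and an arbitrary fee of 50 cents; none of these come from the framework.

```java
// Hypothetical input type; the field is deliberately mutable to show what
// the processor must leave alone.
class Trade {
    final String id;
    long amountCents;

    Trade(String id, long amountCents) {
        this.id = id;
        this.amountCents = amountCents;
    }
}

// Hypothetical output type produced by the processor.
class PricedTrade {
    final String id;
    final long totalCents;

    PricedTrade(String id, long totalCents) {
        this.id = id;
        this.totalCents = totalCents;
    }
}

class IdempotentFeeProcessor {
    private static final long FEE_CENTS = 50; // assumed fee, for illustration only

    // Reads the input but never modifies it, so reprocessing the same cached
    // item after a rollback yields the same result.
    PricedTrade process(Trade trade) {
        return new PricedTrade(trade.id, trade.amountCents + FEE_CENTS);
    }
}

class IdempotencyDemo {
    public static void main(String[] args) {
        Trade trade = new Trade("T1", 1_000);
        IdempotentFeeProcessor processor = new IdempotentFeeProcessor();
        PricedTrade first = processor.process(trade);
        PricedTrade again = processor.process(trade); // simulated reprocessing
        System.out.println(first.totalCents == again.totalCents);
    }
}
```

Had the processor instead added the fee to trade.amountCents in place, the second pass would have charged it twice.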

1.4. ItemStream

Both ItemReaders and ItemWriters serve their individual purposes well, but there is a common concern shared by both that necessitates another interface. In general, as part of the scope of a batch job, readers and writers need to be opened and closed, and they require a mechanism for persisting state. The ItemStream interface serves that purpose, as shown in the following example:

public interface ItemStream {

void open(ExecutionContext executionContext) throws ItemStreamException;

void update(ExecutionContext executionContext) throws ItemStreamException;

void close() throws ItemStreamException;

}

Before describing each method, we should mention the ExecutionContext. Clients of an ItemReader that also implement ItemStream should call open before any calls to read, in order to open any resources such as files or to obtain connections. A similar restriction applies to an ItemWriter that implements ItemStream. As mentioned in Chapter 2, if expected data is found in the ExecutionContext, it may be used to start the ItemReader or ItemWriter at a location other than its initial state. Conversely, close is called to ensure that any resources allocated during open are released safely. update is called primarily to ensure that any state currently being held is loaded into the provided ExecutionContext. This method is called before committing, to ensure that the current state is persisted in the database before commit.

In the special case where the client of an ItemStream is a Step (from the Spring Batch Core), an ExecutionContext is created for each StepExecution to allow users to store the state of a particular execution, with the expectation that it is returned if the same JobInstance is started again. For those familiar with Quartz, the semantics are very similar to a Quartz JobDataMap.
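The open, update, and close lifecycle can be sketched with a reader that resumes from saved state. This is a minimal illustration under stated assumptions: a plain Map stands in for the ExecutionContext, the key name "reader.index" is invented, and RestartableListReader is a hypothetical class whose method names merely follow the ItemStream interface above.

```java
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical restartable reader; a Map stands in for ExecutionContext.
class RestartableListReader {
    private static final String INDEX_KEY = "reader.index"; // assumed key name
    private final List<String> items;
    private int index;

    RestartableListReader(List<String> items) {
        this.items = items;
    }

    // open: resume from saved state if present, otherwise start at the beginning.
    void open(Map<String, Object> context) {
        Object saved = context.get(INDEX_KEY);
        index = (saved instanceof Integer) ? (Integer) saved : 0;
    }

    String read() {
        return index < items.size() ? items.get(index++) : null;
    }

    // update: copy the current position into the context so it can be persisted.
    void update(Map<String, Object> context) {
        context.put(INDEX_KEY, index);
    }

    void close() {
        // Nothing to release in this in-memory sketch.
    }
}

class RestartDemo {
    static String firstItemAfterRestart() {
        List<String> data = List.of("a", "b", "c");
        Map<String, Object> context = new HashMap<>();

        RestartableListReader firstRun = new RestartableListReader(data);
        firstRun.open(context);
        firstRun.read();
        firstRun.read();
        firstRun.update(context); // state captured before the "commit"
        firstRun.close();

        RestartableListReader secondRun = new RestartableListReader(data);
        secondRun.open(context);  // picks up where the first run left off
        return secondRun.read();
    }

    public static void main(String[] args) {
        System.out.println(firstItemAfterRestart());
    }
}
```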

1.5. The Delegate Pattern and Registering with the Step

Note that the CompositeItemWriter is an example of the delegation pattern, which is common in Spring Batch. The delegates themselves might implement callback interfaces, such as StepListener. If they do and if they are being used in conjunction with Spring Batch Core as part of a Step in a Job, then they almost certainly need to be registered manually with the Step. A reader, writer, or processor that is directly wired into the Step gets registered automatically if it implements ItemStream or a StepListener interface. However, because the delegates are not known to the Step, they need to be injected as listeners or streams (or both if appropriate), as shown in the following example:

<job id="ioSampleJob">

<step name="step1">

<tasklet>

<chunk reader="fooReader" processor="fooProcessor" writer="compositeItemWriter"

commit-interval="2">

<streams>

<stream ref="barWriter" />

</streams>

</chunk>

</tasklet>

</step>

</job>

<bean id="compositeItemWriter" class="...CustomCompositeItemWriter">

<property name="delegate" ref="barWriter" />

</bean>

<bean id="barWriter" class="...BarWriter" />

@Bean

public Job ioSampleJob() {

return this.jobBuilderFactory.get("ioSampleJob")

.start(step1())

.build();

}

@Bean

public Step step1() {

return this.stepBuilderFactory.get("step1")

.<Foo, Bar>chunk(2)

.reader(fooReader())

.processor(fooProcessor())

.writer(compositeItemWriter())

.stream(barWriter())

.build();

}

@Bean

public CustomCompositeItemWriter compositeItemWriter() {

CustomCompositeItemWriter writer = new CustomCompositeItemWriter();

writer.setDelegate(barWriter());

return writer;

}

@Bean

public BarWriter barWriter() {

return new BarWriter();

}

1.6. Flat Files

One of the most common mechanisms for interchanging bulk data has always been the flat file. Unlike XML, which has an agreed upon standard for defining how it is structured (XSD), anyone reading a flat file must understand ahead of time exactly how the file is structured. In general, all flat files fall into two types: delimited and fixed length. Delimited files are those in which fields are separated by a delimiter, such as a comma. Fixed Length files have fields that are a set length.

1.6.1. The FieldSet

When working with flat files in Spring Batch, regardless of whether it is for input or output, one of the most important classes is the FieldSet. Many architectures and libraries contain abstractions for helping you read in from a file, but they usually return a String or an array of String objects. This really only gets you halfway there. A FieldSet is Spring Batch’s abstraction for enabling the binding of fields from a file resource. It allows developers to work with file input in much the same way as they would work with database input. A FieldSet is conceptually similar to a JDBC ResultSet. A FieldSet requires only one argument: a String array of tokens. Optionally, you can also configure the names of the fields so that the fields may be accessed either by index or name as patterned after ResultSet, as shown in the following example:

String[] tokens = new String[]{"foo", "1", "true"};

FieldSet fs = new DefaultFieldSet(tokens);

String name = fs.readString(0);

int value = fs.readInt(1);

boolean booleanValue = fs.readBoolean(2);

There are many more options on the FieldSet interface, such as Date, long, BigDecimal, and so on. The biggest advantage of the FieldSet is that it provides consistent parsing of flat file input. Rather than each batch job parsing differently in potentially unexpected ways, it can be consistent, both when handling errors caused by a format exception, or when doing simple data conversions.

1.6.2. FlatFileItemReader

A flat file is any type of file that contains at most two-dimensional (tabular) data. Reading flat files in the Spring Batch framework is facilitated by the class called FlatFileItemReader, which provides basic functionality for reading and parsing flat files. The two most important required dependencies of FlatFileItemReader are Resource and LineMapper. The LineMapper interface is explored more in the next sections. The resource property represents a Spring Core Resource. Documentation explaining how to create beans of this type can be found in Spring Framework, Chapter 5. Resources. Therefore, this guide does not go into the details of creating Resource objects beyond showing the following simple example:

Resource resource = new FileSystemResource("resources/trades.csv");

In complex batch environments, the directory structures are often managed by the EAI infrastructure, where drop zones for external interfaces are established for moving files from FTP locations to batch processing locations and vice versa. File moving utilities are beyond the scope of the Spring Batch architecture, but it is not unusual for batch job streams to include file moving utilities as steps in the job stream. The batch architecture only needs to know how to locate the files to be processed. Spring Batch begins the process of feeding the data into the pipe from this starting point. However, Spring Integration provides many of these types of services.

The other properties in FlatFileItemReader let you further specify how your data is interpreted, as described in the following table:

| Property | Type | Description |
|---|---|---|
| comments | String[] | Specifies line prefixes that indicate comment rows. |
| encoding | String | Specifies what text encoding to use. The default is the value of |
| lineMapper | LineMapper | Converts a String to an Object representing the item. |
| linesToSkip | int | Number of lines to ignore at the top of the file. |
| recordSeparatorPolicy | RecordSeparatorPolicy | Used to determine where the line endings are and do things like continue over a line ending if inside a quoted string. |
| resource | Resource | The resource from which to read. |
| skippedLinesCallback | LineCallbackHandler | Interface that passes the raw line content of the lines in the file to be skipped. If |
| strict | boolean | In strict mode, the reader throws an exception on |

LineMapper

As with RowMapper, which takes a low-level construct such as ResultSet and returns an Object, flat file processing requires the same construct to convert a String line into an Object, as shown in the following interface definition:

public interface LineMapper<T> {

T mapLine(String line, int lineNumber) throws Exception;

}

The basic contract is that, given the current line and the line number with which it is associated, the mapper should return a resulting domain object. This is similar to RowMapper, in that each line is associated with its line number, just as each row in a ResultSet is tied to its row number. This allows the line number to be tied to the resulting domain object for identity comparison or for more informative logging. However, unlike RowMapper, the LineMapper is given a raw line which, as discussed above, only gets you halfway there. The line must be tokenized into a FieldSet, which can then be mapped to an object, as described later in this document.

LineTokenizer

An abstraction for turning a line of input into a FieldSet is necessary because there can be many formats of flat file data that need to be converted to a FieldSet. In Spring Batch, this interface is the LineTokenizer:

public interface LineTokenizer {

FieldSet tokenize(String line);

}

The contract of a LineTokenizer is such that, given a line of input (in theory the String could encompass more than one line), a FieldSet representing the line is returned. This FieldSet can then be passed to a FieldSetMapper. Spring Batch contains the following LineTokenizer implementations:

DelimitedLineTokenizer: Used for files where fields in a record are separated by a delimiter. The most common delimiter is a comma, but pipes or semicolons are often used as well.

FixedLengthTokenizer: Used for files where fields in a record are each a "fixed width". The width of each field must be defined for each record type.

PatternMatchingCompositeLineTokenizer: Determines which LineTokenizer among a list of tokenizers should be used on a particular line by checking against a pattern.

FieldSetMapper

The FieldSetMapper interface defines a single method, mapFieldSet, which takes a FieldSet object and maps its contents to an object. This object may be a custom DTO, a domain object, or an array, depending on the needs of the job. The FieldSetMapper is used in conjunction with the LineTokenizer to translate a line of data from a resource into an object of the desired type, as shown in the following interface definition:

public interface FieldSetMapper<T> {

T mapFieldSet(FieldSet fieldSet) throws BindException;

}

The pattern used is the same as the RowMapper used by JdbcTemplate.

DefaultLineMapper

Now that the basic interfaces for reading in flat files have been defined, it becomes clear that three basic steps are required:

Read one line from the file.

Pass the String line into the LineTokenizer#tokenize() method to retrieve a FieldSet.

Pass the FieldSet returned from tokenizing to a FieldSetMapper, returning the result from the ItemReader#read() method.

The two interfaces described above represent two separate tasks: converting a line into a FieldSet and mapping a FieldSet to a domain object. Because the input of a LineTokenizer matches the input of the LineMapper (a line), and the output of a FieldSetMapper matches the output of the LineMapper, a default implementation that uses both a LineTokenizer and a FieldSetMapper is provided. The DefaultLineMapper, shown in the following class definition, represents the behavior most users need:

public class DefaultLineMapper<T> implements LineMapper<T>, InitializingBean {

private LineTokenizer tokenizer;

private FieldSetMapper<T> fieldSetMapper;

public T mapLine(String line, int lineNumber) throws Exception {

return fieldSetMapper.mapFieldSet(tokenizer.tokenize(line));

}

public void setLineTokenizer(LineTokenizer tokenizer) {

this.tokenizer = tokenizer;

}

public void setFieldSetMapper(FieldSetMapper<T> fieldSetMapper) {

this.fieldSetMapper = fieldSetMapper;

}

}

The above functionality is provided in a default implementation, rather than being built into the reader itself (as was done in previous versions of the framework) to allow users greater flexibility in controlling the parsing process, especially if access to the raw line is needed.
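The two-step composition (tokenize the line, then map the tokens) can be sketched in plain Java with function chaining. This is only an analogy to the DefaultLineMapper design: a List of String stands in for a FieldSet, and Point and LineMappingSketch are hypothetical names.

```java
import java.util.Arrays;
import java.util.List;
import java.util.function.Function;

// Hypothetical domain object used only for this sketch.
class Point {
    final int x;
    final int y;

    Point(int x, int y) {
        this.x = x;
        this.y = y;
    }
}

class LineMappingSketch {
    // Chains the two steps: line -> tokens -> domain object.
    static <T> Function<String, T> lineMapper(Function<String, List<String>> tokenizer,
                                              Function<List<String>, T> fieldMapper) {
        return tokenizer.andThen(fieldMapper);
    }

    static Point parsePoint(String line) {
        // Step 1: a comma tokenizer (a List<String> stands in for a FieldSet).
        Function<String, List<String>> commaTokenizer = l -> Arrays.asList(l.split(","));
        // Step 2: a mapper from tokens to the domain object.
        Function<List<String>, Point> pointMapper =
                fields -> new Point(Integer.parseInt(fields.get(0)),
                                    Integer.parseInt(fields.get(1)));
        return lineMapper(commaTokenizer, pointMapper).apply(line);
    }

    public static void main(String[] args) {
        Point p = parsePoint("3,4");
        System.out.println(p.x + "," + p.y);
    }
}
```

Because the two steps are separate functions, either one can be swapped (for example, a different delimiter) without touching the other.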

Simple Delimited File Reading Example

The following example illustrates how to read a flat file with an actual domain scenario. This particular batch job reads in football players from the following file:

ID,lastName,firstName,position,birthYear,debutYear

AbduKa00,Abdul-Jabbar,Karim,rb,1974,1996

AbduRa00,Abdullah,Rabih,rb,1975,1999

AberWa00,Abercrombie,Walter,rb,1959,1982

AbraDa00,Abramowicz,Danny,wr,1945,1967

AdamBo00,Adams,Bob,te,1946,1969

AdamCh00,Adams,Charlie,wr,1979,2003

The contents of this file are mapped to the following Player domain object:

public class Player implements Serializable {

private String ID;

private String lastName;

private String firstName;

private String position;

private int birthYear;

private int debutYear;

public String toString() {

return "PLAYER:ID=" + ID + ",Last Name=" + lastName +

",First Name=" + firstName + ",Position=" + position +

",Birth Year=" + birthYear + ",DebutYear=" +

debutYear;

}

// setters and getters...

}

To map a FieldSet into a Player object, a FieldSetMapper that returns players needs to be defined, as shown in the following example:

protected static class PlayerFieldSetMapper implements FieldSetMapper<Player> {

public Player mapFieldSet(FieldSet fieldSet) {

Player player = new Player();

player.setID(fieldSet.readString(0));

player.setLastName(fieldSet.readString(1));

player.setFirstName(fieldSet.readString(2));

player.setPosition(fieldSet.readString(3));

player.setBirthYear(fieldSet.readInt(4));

player.setDebutYear(fieldSet.readInt(5));

return player;

}

}

The file can then be read by correctly constructing a FlatFileItemReader and calling read, as shown in the following example:

FlatFileItemReader<Player> itemReader = new FlatFileItemReader<Player>();

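itemReader.setResource(new FileSystemResource("resources/players.csv"));

//Skip the header row, which holds column names rather than player data

itemReader.setLinesToSkip(1);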

//DelimitedLineTokenizer defaults to comma as its delimiter

DefaultLineMapper<Player> lineMapper = new DefaultLineMapper<Player>();

lineMapper.setLineTokenizer(new DelimitedLineTokenizer());

lineMapper.setFieldSetMapper(new PlayerFieldSetMapper());

itemReader.setLineMapper(lineMapper);

itemReader.open(new ExecutionContext());

Player player = itemReader.read();

Each call to read returns a new Player object from each line in the file. When the end of the file is reached, null is returned.

Mapping Fields by Name

There is one additional piece of functionality that is allowed by both DelimitedLineTokenizer and FixedLengthTokenizer and that is similar in function to a JDBC ResultSet. The names of the fields can be injected into either of these LineTokenizer implementations to increase the readability of the mapping function. First, the column names of all fields in the flat file are injected into the tokenizer, as shown in the following example:

tokenizer.setNames(new String[] {"ID", "lastName","firstName","position","birthYear","debutYear"});

A FieldSetMapper can use this information as follows:

public class PlayerMapper implements FieldSetMapper<Player> {

public Player mapFieldSet(FieldSet fs) {

if(fs == null){

return null;

}

Player player = new Player();

player.setID(fs.readString("ID"));

player.setLastName(fs.readString("lastName"));

player.setFirstName(fs.readString("firstName"));

player.setPosition(fs.readString("position"));

player.setDebutYear(fs.readInt("debutYear"));

player.setBirthYear(fs.readInt("birthYear"));

return player;

}

}

Automapping FieldSets to Domain Objects

For many, having to write a specific FieldSetMapper is as cumbersome as writing a specific RowMapper for a JdbcTemplate. Spring Batch makes this easier by providing a FieldSetMapper that automatically maps fields by matching a field name with a setter on the object using the JavaBean specification. Again using the football example, the BeanWrapperFieldSetMapper configuration looks like the following snippet:

<bean id="fieldSetMapper"

class="org.springframework.batch.item.file.mapping.BeanWrapperFieldSetMapper">

<property name="prototypeBeanName" value="player" />

</bean>

<bean id="player"

class="org.springframework.batch.sample.domain.Player"

scope="prototype" />

@Bean

public FieldSetMapper fieldSetMapper() {

BeanWrapperFieldSetMapper fieldSetMapper = new BeanWrapperFieldSetMapper();

fieldSetMapper.setPrototypeBeanName("player");

return fieldSetMapper;

}

@Bean

@Scope("prototype")

public Player player() {

return new Player();

}

For each entry in the FieldSet, the mapper looks for a corresponding setter on a new instance of the Player object (for this reason, prototype scope is required) in the same way the Spring container looks for setters matching a property name. Each available field in the FieldSet is mapped, and the resultant Player object is returned, with no code required.
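The name-to-setter matching can be approximated with plain reflection. The sketch below is a rough illustration of the idea, not how BeanWrapperFieldSetMapper is implemented: a Map of field names to raw strings stands in for a named FieldSet, PlayerBean is a hypothetical two-property bean, and only String and int setters are handled.

```java
import java.lang.reflect.Method;
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical bean with JavaBean-style setters.
class PlayerBean {
    private String lastName;
    private int birthYear;

    public void setLastName(String lastName) { this.lastName = lastName; }
    public void setBirthYear(int birthYear) { this.birthYear = birthYear; }
    public String getLastName() { return lastName; }
    public int getBirthYear() { return birthYear; }
}

class SetterMappingSketch {
    // For each named field, find setXxx(...) on the target and invoke it,
    // converting the raw String to int where the setter expects one.
    static <T> T map(Map<String, String> fields, T target) {
        try {
            for (Map.Entry<String, String> field : fields.entrySet()) {
                String name = field.getKey();
                String setter = "set" + Character.toUpperCase(name.charAt(0)) + name.substring(1);
                for (Method method : target.getClass().getMethods()) {
                    if (method.getName().equals(setter) && method.getParameterCount() == 1) {
                        Class<?> type = method.getParameterTypes()[0];
                        Object value = (type == int.class)
                                ? (Object) Integer.valueOf(field.getValue())
                                : field.getValue();
                        method.invoke(target, value);
                    }
                }
            }
            return target;
        } catch (ReflectiveOperationException e) {
            throw new IllegalStateException(e);
        }
    }

    public static void main(String[] args) {
        Map<String, String> fields = new LinkedHashMap<>();
        fields.put("lastName", "Adams");
        fields.put("birthYear", "1946");
        PlayerBean player = map(fields, new PlayerBean());
        System.out.println(player.getLastName() + " " + player.getBirthYear());
    }
}
```

A fresh target instance is passed in for every record, which mirrors why the prototype scope matters in the configuration above.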

Fixed Length File Formats

So far, only delimited files have been discussed in much detail. However, they represent only half of the file reading picture. Many organizations that use flat files use fixed length formats. An example fixed length file follows:

UK21341EAH4121131.11customer1

UK21341EAH4221232.11customer2

UK21341EAH4321333.11customer3

UK21341EAH4421434.11customer4

UK21341EAH4521535.11customer5

While this looks like one large field, it actually represents four distinct fields:

ISIN: Unique identifier for the item being ordered - 12 characters long.

Quantity: Number of the item being ordered - 3 characters long.

Price: Price of the item - 5 characters long.

Customer: ID of the customer ordering the item - 9 characters long.

When configuring the FixedLengthTokenizer, each of these lengths must be provided in the form of ranges, as shown in the following example:

<bean id="fixedLengthLineTokenizer"

class="org.springframework.batch.item.file.transform.FixedLengthTokenizer">

<property name="names" value="ISIN,Quantity,Price,Customer" />

<property name="columns" value="1-12, 13-15, 16-20, 21-29" />

</bean>

Because the FixedLengthTokenizer uses the same LineTokenizer interface as discussed above, it returns the same FieldSet as if a delimiter had been used. This allows the same approaches to be used in handling its output, such as using the BeanWrapperFieldSetMapper.

|

Supporting the above syntax for ranges requires that a specialized property editor, |

@Bean

public FixedLengthTokenizer fixedLengthTokenizer() {

FixedLengthTokenizer tokenizer = new FixedLengthTokenizer();

tokenizer.setNames("ISIN", "Quantity", "Price", "Customer");

tokenizer.setColumns(new Range(1, 12),

new Range(13, 15),

new Range(16, 20),

new Range(21, 29));

return tokenizer;

}
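To make the column ranges concrete, the following sketch slices one of the sample lines using the same 1-based, inclusive ranges (1-12, 13-15, 16-20, 21-29). FixedWidthSketch is a hypothetical helper written for this illustration and does not use the framework's tokenizer.

```java
import java.util.ArrayList;
import java.util.List;

class FixedWidthSketch {
    // Each range is {min, max}, 1-based and inclusive, as in the configuration.
    static List<String> tokenize(String line, int[][] ranges) {
        List<String> fields = new ArrayList<>();
        for (int[] range : ranges) {
            // 1-based inclusive [min, max] becomes 0-based half-open [min - 1, max).
            fields.add(line.substring(range[0] - 1, range[1]).trim());
        }
        return fields;
    }

    public static void main(String[] args) {
        int[][] ranges = {{1, 12}, {13, 15}, {16, 20}, {21, 29}};
        // ISIN, Quantity, Price, Customer
        System.out.println(tokenize("UK21341EAH4121131.11customer1", ranges));
    }
}
```

For the first sample line this yields the ISIN UK21341EAH41, the quantity 211, the price 31.11, and the customer customer1.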

Multiple Record Types within a Single File

All of the file reading examples up to this point have made a key assumption for simplicity’s sake: all of the records in a file have the same format. However, this may not always be the case. It is very common that a file might have records with different formats that need to be tokenized differently and mapped to different objects. The following excerpt from a file illustrates this:

USER;Smith;Peter;;T;20014539;F

LINEA;1044391041ABC037.49G201XX1383.12H

LINEB;2134776319DEF422.99M005LI

In this file we have three types of records, "USER", "LINEA", and "LINEB". A "USER" line corresponds to a User object. "LINEA" and "LINEB" both correspond to Line objects, though a "LINEA" has more information than a "LINEB".

The ItemReader reads each line individually, but we must specify different LineTokenizer and FieldSetMapper objects so that the ItemWriter receives the correct items. The PatternMatchingCompositeLineMapper makes this easy by allowing maps of patterns to LineTokenizer instances and patterns to FieldSetMapper instances to be configured, as shown in the following example:

<bean id="orderFileLineMapper"

class="org.spr...PatternMatchingCompositeLineMapper">

<property name="tokenizers">

<map>

<entry key="USER*" value-ref="userTokenizer" />

<entry key="LINEA*" value-ref="lineATokenizer" />

<entry key="LINEB*" value-ref="lineBTokenizer" />

</map>

</property>

<property name="fieldSetMappers">

<map>

<entry key="USER*" value-ref="userFieldSetMapper" />

<entry key="LINE*" value-ref="lineFieldSetMapper" />

</map>

</property>

</bean>

@Bean

public PatternMatchingCompositeLineMapper orderFileLineMapper() {

PatternMatchingCompositeLineMapper lineMapper =

new PatternMatchingCompositeLineMapper();

Map<String, LineTokenizer> tokenizers = new HashMap<>(3);

tokenizers.put("USER*", userTokenizer());

tokenizers.put("LINEA*", lineATokenizer());

tokenizers.put("LINEB*", lineBTokenizer());

lineMapper.setTokenizers(tokenizers);

Map<String, FieldSetMapper> mappers = new HashMap<>(2);

mappers.put("USER*", userFieldSetMapper());

mappers.put("LINE*", lineFieldSetMapper());

lineMapper.setFieldSetMappers(mappers);

return lineMapper;

}

In this example, "LINEA" and "LINEB" have separate LineTokenizer instances, but they both use the same FieldSetMapper.

The PatternMatchingCompositeLineMapper uses the PatternMatcher#match method in order to select the correct delegate for each line. The PatternMatcher allows for two wildcard characters with special meaning: the question mark ("?") matches exactly one character, while the asterisk ("*") matches zero or more characters. Note that, in the preceding configuration, all patterns end with an asterisk, making them effectively prefixes to lines. The PatternMatcher always matches the most specific pattern possible, regardless of the order in the configuration. So if "LINE*" and "LINEA*" were both listed as patterns, "LINEA" would match pattern "LINEA*", while "LINEB" would match pattern "LINE*". Additionally, a single asterisk ("*") can serve as a default by matching any line not matched by any other pattern, as shown in the following example.

<entry key="*" value-ref="defaultLineTokenizer" />

...

tokenizers.put("*", defaultLineTokenizer());

...

There is also a PatternMatchingCompositeLineTokenizer that can be used for tokenization alone.
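The wildcard rules described above can be tried out without the framework. The following is a minimal sketch in plain Java, not the library's PatternMatcher: the class WildcardSketch and its methods are hypothetical, and "most specific" is approximated by trying patterns in reverse lexicographic order, an assumption that ranks "LINEA*" ahead of "LINE*" and leaves "*" for last.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Hypothetical stand-in for illustration; not Spring Batch's PatternMatcher.
class WildcardSketch {

    // '?' matches exactly one character, '*' matches zero or more characters.
    static boolean matches(String pattern, String text) {
        int p = 0, t = 0, star = -1, mark = 0;
        while (t < text.length()) {
            if (p < pattern.length() && pattern.charAt(p) == '*') {
                star = p++;   // remember the asterisk and first try matching nothing
                mark = t;
            } else if (p < pattern.length()
                    && (pattern.charAt(p) == '?' || pattern.charAt(p) == text.charAt(t))) {
                p++;
                t++;
            } else if (star >= 0) {
                p = star + 1; // backtrack: let the last asterisk absorb one more character
                t = ++mark;
            } else {
                return false;
            }
        }
        while (p < pattern.length() && pattern.charAt(p) == '*') {
            p++;
        }
        return p == pattern.length();
    }

    // Assumption: reverse lexicographic order approximates "most specific first".
    static <S> S select(Map<String, S> delegates, String line) {
        List<String> patterns = new ArrayList<>(delegates.keySet());
        patterns.sort(Collections.reverseOrder());
        for (String pattern : patterns) {
            if (matches(pattern, line)) {
                return delegates.get(pattern);
            }
        }
        throw new IllegalStateException("No pattern matches line: " + line);
    }

    public static void main(String[] args) {
        Map<String, String> tokenizers = new LinkedHashMap<>();
        tokenizers.put("*", "defaultLineTokenizer");
        tokenizers.put("LINE*", "lineTokenizer");
        tokenizers.put("LINEA*", "lineATokenizer");
        System.out.println(select(tokenizers, "LINEA;1044391041ABC037.49G201XX1383.12H"));
        System.out.println(select(tokenizers, "LINEB;2134776319DEF422.99M005LI"));
        System.out.println(select(tokenizers, "USER;Smith;Peter;;T;20014539;F"));
    }
}
```

In this sketch the "USER" line falls through to the "*" entry because no "USER*" pattern was registered, which mirrors the role of the default pattern.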

It is also common for a flat file to contain records that each span multiple lines. To handle this situation, a more complex strategy is required. A demonstration of this common pattern can be found in the multiLineRecords sample.

Exception Handling in Flat Files

There are many scenarios when tokenizing a line may cause exceptions to be thrown. Many flat files are imperfect and contain incorrectly formatted records. Many users choose to skip these erroneous lines while logging the issue, the original line, and the line number. These logs can later be inspected manually or by another batch job. For this reason, Spring Batch provides a hierarchy of exceptions for handling parse exceptions: FlatFileParseException and FlatFileFormatException. FlatFileParseException is thrown by the FlatFileItemReader when any errors are encountered while trying to read a file. FlatFileFormatException is thrown by implementations of the LineTokenizer interface and indicates a more specific error encountered while tokenizing.

IncorrectTokenCountException

Both DelimitedLineTokenizer and FixedLengthLineTokenizer have the ability to specify column names that can be used for creating a FieldSet. However, if the number of column names does not match the number of columns found while tokenizing a line, the FieldSet cannot be created, and an IncorrectTokenCountException is thrown, which contains the number of tokens encountered, and the number expected, as shown in the following example:

tokenizer.setNames(new String[] {"A", "B", "C", "D"});

try {

tokenizer.tokenize("a,b,c");

}

catch(IncorrectTokenCountException e){

assertEquals(4, e.getExpectedCount());

assertEquals(3, e.getActualCount());

}

Because the tokenizer was configured with 4 column names but only 3 tokens were found in the file, an IncorrectTokenCountException was thrown.
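The check behind this exception is simple enough to sketch in plain Java. The example below is illustrative only: TokenCountSketch and TokenCountMismatch are hypothetical names, and the comma delimiter is hard-coded for brevity.

```java
import java.util.Arrays;
import java.util.List;

// Hypothetical sketch; the exception and method names are illustrative only.
class TokenCountSketch {

    static class TokenCountMismatch extends RuntimeException {
        final int expected;
        final int actual;

        TokenCountMismatch(int expected, int actual) {
            super("Expected " + expected + " tokens but found " + actual);
            this.expected = expected;
            this.actual = actual;
        }
    }

    // Splits a line on commas and fails if the token count differs from the name count.
    static List<String> tokenize(String line, String... names) {
        // The -1 limit keeps trailing empty tokens, so "a,b," yields three tokens.
        String[] tokens = line.split(",", -1);
        if (tokens.length != names.length) {
            throw new TokenCountMismatch(names.length, tokens.length);
        }
        return Arrays.asList(tokens);
    }

    public static void main(String[] args) {
        try {
            tokenize("a,b,c", "A", "B", "C", "D");
        } catch (TokenCountMismatch e) {
            System.out.println(e.getMessage());
        }
    }
}
```

Carrying both counts in the exception lets a skip listener or log statement report exactly how the record deviated from the expected layout.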

IncorrectLineLengthException

Files formatted in a fixed-length format have additional requirements when parsing because, unlike a delimited format, each column must strictly adhere to its predefined width. If the total line length does not equal the highest position covered by the configured column ranges, an exception is thrown, as shown in the following example:

tokenizer.setColumns(new Range[] { new Range(1, 5),

new Range(6, 10),

new Range(11, 15) });

try {

tokenizer.tokenize("12345");

fail("Expected IncorrectLineLengthException");

}

catch (IncorrectLineLengthException ex) {

assertEquals(15, ex.getExpectedLength());

assertEquals(5, ex.getActualLength());

}

The configured ranges for the tokenizer above are: 1-5, 6-10, and 11-15. Consequently, the total length of the line is 15. However, in the preceding example, a line of length 5 was passed in, causing an IncorrectLineLengthException to be thrown. Throwing an exception here rather than only mapping the first column allows the processing of the line to fail earlier and with more information than it would contain if it failed while trying to read in column 2 in a FieldSetMapper. However, there are scenarios where the length of the line is not always constant. For this reason, validation of line length can be turned off via the 'strict' property, as shown in the following example:

tokenizer.setColumns(new Range[] { new Range(1, 5), new Range(6, 10) });

tokenizer.setStrict(false);

FieldSet tokens = tokenizer.tokenize("12345");

assertEquals("12345", tokens.readString(0));

assertEquals("", tokens.readString(1));

The preceding example is almost identical to the one before it, except that tokenizer.setStrict(false) was called. This setting tells the tokenizer to not enforce line lengths when tokenizing the line. A FieldSet is now correctly created and returned. However, it contains only empty tokens for the remaining values.
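The difference between strict and lenient handling can be sketched in plain Java. This is not the FixedLengthLineTokenizer itself: FixedLengthSketch is a hypothetical class, ranges are passed as simple int pairs, and a generic IllegalArgumentException stands in for the dedicated exception type.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical sketch of strict versus lenient fixed-length tokenizing.
class FixedLengthSketch {

    // Each range is {start, end}, 1-based and inclusive, as in Range(1, 5).
    static List<String> tokenize(String line, int[][] ranges, boolean strict) {
        int expectedLength = 0;
        for (int[] range : ranges) {
            expectedLength = Math.max(expectedLength, range[1]);
        }
        if (strict && line.length() != expectedLength) {
            throw new IllegalArgumentException(
                    "Expected length " + expectedLength + " but was " + line.length());
        }
        List<String> tokens = new ArrayList<>();
        for (int[] range : ranges) {
            // Clamp both bounds so a short line yields empty tokens instead of failing.
            int start = Math.min(range[0] - 1, line.length());
            int end = Math.min(range[1], line.length());
            tokens.add(line.substring(start, end));
        }
        return tokens;
    }

    public static void main(String[] args) {
        int[][] ranges = { {1, 5}, {6, 10} };
        System.out.println(tokenize("12345", ranges, false));
    }
}
```

With strict set to false, the short line produces "12345" for the first range and an empty string for the second, matching the assertions in the preceding example.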

1.6.3. FlatFileItemWriter

Writing out to flat files has the same problems and issues that reading in from a file must overcome. A step must be able to write either delimited or fixed length formats in a transactional manner.

LineAggregator

Just as the LineTokenizer interface is necessary to take a String and turn it into a FieldSet, file writing must have a way to aggregate multiple fields into a single string for writing to a file. In Spring Batch, this is the LineAggregator, shown in the following interface definition:

public interface LineAggregator<T> {

public String aggregate(T item);

}

The LineAggregator is the logical opposite of LineTokenizer. LineTokenizer takes a String and returns a FieldSet, whereas LineAggregator takes an item and returns a String.

PassThroughLineAggregator

The most basic implementation of the LineAggregator interface is the PassThroughLineAggregator, which assumes that the object is already a string or that its string representation is acceptable for writing, as shown in the following code:

public class PassThroughLineAggregator<T> implements LineAggregator<T> {

public String aggregate(T item) {

return item.toString();

}

}

The preceding implementation is useful if direct control of creating the string is required but the advantages of a FlatFileItemWriter, such as transaction and restart support, are necessary.

Simplified File Writing Example

Now that the LineAggregator interface and its most basic implementation, PassThroughLineAggregator, have been defined, the basic flow of writing can be explained:

The object to be written is passed to the LineAggregator in order to obtain a String.

The returned String is written to the configured file.

The following excerpt from the FlatFileItemWriter expresses this in code:

public void write(T item) throws Exception {

write(lineAggregator.aggregate(item) + LINE_SEPARATOR);

}

A simple configuration might look like the following:

<bean id="itemWriter" class="org.spr...FlatFileItemWriter">

<property name="resource" value="file:target/test-outputs/output.txt" />

<property name="lineAggregator">

<bean class="org.spr...PassThroughLineAggregator"/>

</property>

</bean>

@Bean

public FlatFileItemWriter itemWriter() {

return new FlatFileItemWriterBuilder<Foo>()

.name("itemWriter")

.resource(new FileSystemResource("target/test-outputs/output.txt"))

.lineAggregator(new PassThroughLineAggregator<>())

.build();

}

FieldExtractor

The preceding example may be useful for the most basic uses of writing to a file. However, most users of the FlatFileItemWriter have a domain object that needs to be written out and, thus, must be converted into a line. In file reading, the following was required:

Read one line from the file.

Pass the line into the LineTokenizer#tokenize() method, in order to retrieve a FieldSet.

Pass the FieldSet returned from tokenizing to a FieldSetMapper, returning the result from the ItemReader#read() method.

File writing has similar but inverse steps:

Pass the item to be written to the writer.

Convert the fields on the item into an array.

Aggregate the resulting array into a line.

Because there is no way for the framework to know which fields from the object need to be written out, a FieldExtractor must be written to accomplish the task of turning the item into an array, as shown in the following interface definition:

public interface FieldExtractor<T> {

Object[] extract(T item);

}

Implementations of the FieldExtractor interface should create an array from the fields of the provided object, which can then be written out with a delimiter between the elements or as part of a fixed-width line.
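The two writing steps (item to array, array to line) can be sketched together in plain Java. The example redeclares an interface with the same shape as FieldExtractor so that it compiles on its own; the Person class, the toLine helper, and the sample values are hypothetical.

```java
import java.util.StringJoiner;

// Hypothetical sketch; Person and the helper names are not part of Spring Batch.
class ExtractAndAggregateSketch {

    // Mirrors the shape of the FieldExtractor interface shown above.
    interface FieldExtractor<T> {
        Object[] extract(T item);
    }

    static class Person {
        final String first;
        final String last;
        final int born;

        Person(String first, String last, int born) {
            this.first = first;
            this.last = last;
            this.born = born;
        }
    }

    // Turns the item into an array, then joins the array into one delimited line.
    static <T> String toLine(T item, FieldExtractor<T> extractor, String delimiter) {
        StringJoiner line = new StringJoiner(delimiter);
        for (Object field : extractor.extract(item)) {
            line.add(String.valueOf(field));
        }
        return line.toString();
    }

    public static void main(String[] args) {
        // The extractor decides both which fields are written and in what order.
        FieldExtractor<Person> extractor = p -> new Object[] { p.last, p.first, p.born };
        System.out.println(toLine(new Person("Alan", "Turing", 1912), extractor, ","));
    }
}
```

Keeping extraction separate from aggregation means the same extractor could feed either a delimited or a fixed-width line format.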

PassThroughFieldExtractor

There are many cases where a collection, such as an array, Collection, or FieldSet, needs to be written out. "Extracting" an array from one of these collection types is straightforward: convert the collection to an array. The PassThroughFieldExtractor should be used in this scenario. Note that, if the object passed in is not a type of collection, the PassThroughFieldExtractor returns an array containing solely the item to be extracted.
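The behavior described above can be sketched in a few lines of plain Java. PassThroughSketch is a hypothetical class, not the library implementation, and it covers only arrays, java.util.Collection instances, and single objects (FieldSet is omitted because it is a framework type).

```java
import java.util.Arrays;
import java.util.Collection;
import java.util.List;

// Hypothetical sketch of the pass-through idea; not the library class.
class PassThroughSketch {

    static Object[] extract(Object item) {
        if (item instanceof Object[]) {
            return (Object[]) item;                   // already an array
        }
        if (item instanceof Collection) {
            return ((Collection<?>) item).toArray();  // convert the collection
        }
        return new Object[] { item };                 // wrap anything else
    }

    public static void main(String[] args) {
        List<String> fields = Arrays.asList("a", "b", "c");
        System.out.println(Arrays.toString(extract(fields)));
        System.out.println(Arrays.toString(extract("single")));
    }
}
```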

BeanWrapperFieldExtractor

As with the BeanWrapperFieldSetMapper described in the file reading section, it is often preferable to configure how to convert a domain object to an object array, rather than writing the conversion yourself. The BeanWrapperFieldExtractor provides this functionality, as shown in the following example:

BeanWrapperFieldExtractor<Name> extractor = new BeanWrapperFieldExtractor<Name>();

extractor.setNames(new String[] { "first", "last", "born" });

String first = "Alan";

String last = "Turing";

int born = 1912;

Name n = new Name(first, last, born);

Object[] values = extractor.extract(n);

assertEquals(first, values[0]);

assertEquals(last, values[1]);

assertEquals(born, values[2]);

This extractor implementation has only one required property: the names of the fields to map. Just as the BeanWrapperFieldSetMapper needs field names to map fields on the FieldSet to setters on the provided object, the BeanWrapperFieldExtractor needs names to map to getters for creating an object array. It is worth noting that the order of the names determines the order of the fields within the array.
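To make the name-to-getter mapping concrete, here is a minimal sketch that resolves getters with plain reflection. It is only an approximation of the idea: GetterExtractSketch is a hypothetical class, it handles only simple "getXxx" accessors, and it does not use Spring's BeanWrapper.

```java
import java.lang.reflect.Method;

// Hypothetical sketch: resolves getters by name with plain reflection.
class GetterExtractSketch {

    public static class Name {
        private final String first;
        private final String last;
        private final int born;

        public Name(String first, String last, int born) {
            this.first = first;
            this.last = last;
            this.born = born;
        }

        public String getFirst() { return first; }
        public String getLast() { return last; }
        public int getBorn() { return born; }
    }

    // Calls getXxx() for each property name, in the order the names are given.
    static Object[] extract(Object item, String... names) {
        Object[] values = new Object[names.length];
        for (int i = 0; i < names.length; i++) {
            String getter = "get" + Character.toUpperCase(names[i].charAt(0))
                    + names[i].substring(1);
            try {
                Method method = item.getClass().getMethod(getter);
                values[i] = method.invoke(item);
            } catch (ReflectiveOperationException e) {
                throw new IllegalArgumentException("No readable property: " + names[i], e);
            }
        }
        return values;
    }

    public static void main(String[] args) {
        Object[] values = extract(new Name("Alan", "Turing", 1912), "born", "first");
        // The array follows the order of the names, not the order of the fields.
        System.out.println(values[0] + " " + values[1]);
    }
}
```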

Delimited File Writing Example

The most basic flat file format is one in which all fields are separated by a delimiter. This can be accomplished using a DelimitedLineAggregator. The following example writes out a simple domain object that represents a credit to a customer account:

public class CustomerCredit {

private int id;

private String name;

private BigDecimal credit;

//getters and setters removed for clarity

}

Because a domain object is being used, an implementation of the FieldExtractor interface must be provided, along with the delimiter to use, as shown in the following example:

<bean id="itemWriter" class="org.springframework.batch.item.file.FlatFileItemWriter">

<property name="resource" ref="outputResource" />

<property name="lineAggregator">

<bean class="org.spr...DelimitedLineAggregator">

<property name="delimiter" value=","/>

<property name="fieldExtractor">

<bean class="org.spr...BeanWrapperFieldExtractor">

<property name="names" value="name,credit"/>

</bean>

</property>

</bean>

</property>

</bean>

@Bean

public FlatFileItemWriter<CustomerCredit> itemWriter(Resource outputResource) throws Exception {

BeanWrapperFieldExtractor<CustomerCredit> fieldExtractor = new BeanWrapperFieldExtractor<>();

fieldExtractor.setNames(new String[] {"name", "credit"});

fieldExtractor.afterPropertiesSet();

DelimitedLineAggregator<CustomerCredit> lineAggregator = new DelimitedLineAggregator<>();

lineAggregator.setDelimiter(",");

lineAggregator.setFieldExtractor(fieldExtractor);

return new FlatFileItemWriterBuilder<CustomerCredit>()

.name("customerCreditWriter")

.resource(outputResource)

.lineAggregator(lineAggregator)

.build();

}

In the previous example, the BeanWrapperFieldExtractor described earlier in this chapter is used to turn the name and credit fields within CustomerCredit into an object array, which is then written out with commas between each field.

It is also possible to use the FlatFileItemWriterBuilder.DelimitedBuilder to automatically create the BeanWrapperFieldExtractor and DelimitedLineAggregator as shown in the following example:

@Bean

public FlatFileItemWriter<CustomerCredit> itemWriter(Resource outputResource) throws Exception {

return new FlatFileItemWriterBuilder<CustomerCredit>()

.name("customerCreditWriter")

.resource(outputResource)

.delimited()

.delimiter("|")

.names(new String[] {"name", "credit"})

.build();

}

Fixed Width File Writing Example

Delimited is not the only type of flat file format. Many prefer to use a set width for each column to delineate between fields, which is usually referred to as 'fixed width'. Spring Batch supports this in file writing with the FormatterLineAggregator. Using the same CustomerCredit domain object described above, it can be configured as follows:

<bean id="itemWriter" class="org.springframework.batch.item.file.FlatFileItemWriter">

<property name="resource" ref="outputResource" />

<property name="lineAggregator">

<bean class="org.spr...FormatterLineAggregator">

<property name="fieldExtractor">

<bean class="org.spr...BeanWrapperFieldExtractor">

<property name="names" value="name,credit" />

</bean>

</property>

<property name="format" value="%-9s%-2.0f" />

</bean>

</property>

</bean>

@Bean

public FlatFileItemWriter<CustomerCredit> itemWriter(Resource outputResource) throws Exception {

BeanWrapperFieldExtractor<CustomerCredit> fieldExtractor = new BeanWrapperFieldExtractor<>();

fieldExtractor.setNames(new String[] {"name", "credit"});

fieldExtractor.afterPropertiesSet();

FormatterLineAggregator<CustomerCredit> lineAggregator = new FormatterLineAggregator<>();

lineAggregator.setFormat("%-9s%-2.0f");

lineAggregator.setFieldExtractor(fieldExtractor);

return new FlatFileItemWriterBuilder<CustomerCredit>()

.name("customerCreditWriter")

.resource(outputResource)

.lineAggregator(lineAggregator)

.build();

}

Most of the preceding example should look familiar. However, the value of the format property is new and is shown in the following element:

<property name="format" value="%-9s%-2.0f" />

...

FormatterLineAggregator<CustomerCredit> lineAggregator = new FormatterLineAggregator<>();

lineAggregator.setFormat("%-9s%-2.0f");

...

The underlying implementation is built using the same Formatter added as part of Java 5. The Java Formatter is based on the printf functionality of the C programming language. Most details on how to configure a formatter can be found in the Javadoc of Formatter.
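The effect of the "%-9s%-2.0f" format string can be checked directly with the JDK, since String.format uses java.util.Formatter. The sketch below is independent of Spring Batch; the class name FixedWidthFormatSketch and the sample values are made up for illustration.

```java
import java.math.BigDecimal;
import java.util.Locale;

// Sketch using java.util.Formatter through String.format; sample values are made up.
class FixedWidthFormatSketch {

    static String format(String pattern, Object... fields) {
        // Locale.ROOT keeps digits and separators independent of the default locale.
        return String.format(Locale.ROOT, pattern, fields);
    }

    public static void main(String[] args) {
        // %-9s left-justifies the name in 9 columns; %-2.0f prints the credit without decimals.
        System.out.println("[" + format("%-9s%-2.0f", "Smith", new BigDecimal("12.30")) + "]");
        // Width is only a minimum: a longer name overflows unless a precision caps it.
        System.out.println("[" + format("%-9.9s%-2.0f", "Constantine", new BigDecimal("12.30")) + "]");
    }
}
```

Because a width is only a minimum, values longer than their column shift everything after them. Adding a precision to string columns (as in "%-9.9s") is one way to keep each record at a constant length.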

It is also possible to use the FlatFileItemWriterBuilder.FormattedBuilder to automatically create the BeanWrapperFieldExtractor and FormatterLineAggregator, as shown in the following example:

@Bean

public FlatFileItemWriter<CustomerCredit> itemWriter(Resource outputResource) throws Exception {

return new FlatFileItemWriterBuilder<CustomerCredit>()

.name("customerCreditWriter")

.resource(outputResource)

.formatted()

.format("%-9s%-2.0f")

.names(new String[] {"name", "credit"})

.build();

}

Handling File Creation

FlatFileItemReader has a very simple relationship with file resources. When the reader is initialized, it opens the file (if it exists), and throws an exception if it does not. File writing isn’t quite so simple. At first glance, it seems like a similar straightforward contract should exist for FlatFileItemWriter: If the file already exists, throw an exception, and, if it does not, create it and start writing. However, potentially restarting a Job can cause issues. In normal restart scenarios, the contract is reversed: If the file exists, start writing to it from the last known good position, and, if it does not, throw an exception. However, what happens if the file name for this job is always the same? In this case, you would want to delete the file if it exists, unless it’s a restart. Because of this possibility, the FlatFileItemWriter contains the property, shouldDeleteIfExists. Setting this property to true causes an existing file with the same name to be deleted when the writer is opened.
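The contract described above can be summarized as a small decision table. The following sketch only restates the prose in code: FileCreationSketch, the Action enum, and onOpen are hypothetical names, and the real writer's behavior and defaults may differ in detail.

```java
// Hypothetical decision table for the contract described above; names are illustrative.
class FileCreationSketch {

    enum Action { CREATE, DELETE_AND_CREATE, APPEND, FAIL }

    static Action onOpen(boolean fileExists, boolean restart, boolean shouldDeleteIfExists) {
        if (restart) {
            // Restart: continue from the last known good position, or fail if the file is gone.
            return fileExists ? Action.APPEND : Action.FAIL;
        }
        if (!fileExists) {
            return Action.CREATE;
        }
        // Fresh run with a leftover file: delete it only when asked to.
        return shouldDeleteIfExists ? Action.DELETE_AND_CREATE : Action.FAIL;
    }

    public static void main(String[] args) {
        System.out.println(onOpen(true, false, true));
        System.out.println(onOpen(true, true, true));
    }
}
```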

1.7. XML Item Readers and Writers

Spring Batch provides transactional infrastructure for both reading XML records and mapping them to Java objects as well as writing Java objects as XML records.

Constraints on streaming XML

The StAX API is used for I/O, as other standard XML parsing APIs do not fit batch processing requirements (DOM loads the whole input into memory at once and SAX controls the parsing process by allowing the user to provide only callbacks).

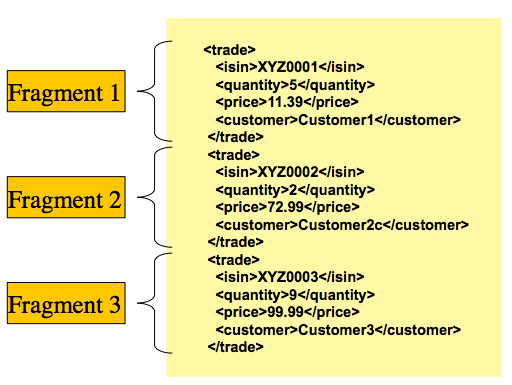

We need to consider how XML input and output works in Spring Batch. First, there are a few concepts that vary from file reading and writing but are common across Spring Batch XML processing. With XML processing, instead of lines of records (FieldSet instances) that need to be tokenized, it is assumed an XML resource is a collection of 'fragments' corresponding to individual records, as shown in the following image:

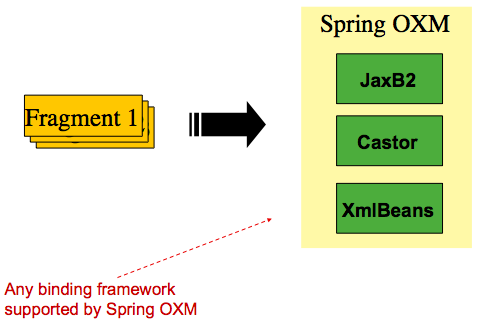

The 'trade' tag is defined as the 'root element' in the scenario above. Everything between '<trade>' and '</trade>' is considered one 'fragment'. Spring Batch uses Object/XML Mapping (OXM) to bind fragments to objects. However, Spring Batch is not tied to any particular XML binding technology. Typical use is to delegate to Spring OXM, which provides uniform abstraction for the most popular OXM technologies. The dependency on Spring OXM is optional and you can choose to implement Spring Batch specific interfaces if desired. The relationship to the technologies that OXM supports is shown in the following image:

With an introduction to OXM and how one can use XML fragments to represent records, we can now more closely examine readers and writers.

1.7.1. StaxEventItemReader

The StaxEventItemReader configuration provides a typical setup for the processing of records from an XML input stream. First, consider the following set of XML records that the StaxEventItemReader can process:

<?xml version="1.0" encoding="UTF-8"?>

<records>

<trade xmlns="https://springframework.org/batch/sample/io/oxm/domain">

<isin>XYZ0001</isin>

<quantity>5</quantity>

<price>11.39</price>

<customer>Customer1</customer>

</trade>

<trade xmlns="https://springframework.org/batch/sample/io/oxm/domain">

<isin>XYZ0002</isin>

<quantity>2</quantity>

<price>72.99</price>

<customer>Customer2c</customer>

</trade>

<trade xmlns="https://springframework.org/batch/sample/io/oxm/domain">

<isin>XYZ0003</isin>

<quantity>9</quantity>

<price>99.99</price>

<customer>Customer3</customer>

</trade>

</records>

To be able to process the XML records, the following is needed:

Root Element Name: The name of the root element of the fragment that constitutes the object to be mapped. The example configuration demonstrates this with the value of trade.

Resource: A Spring Resource that represents the file to read.

Unmarshaller: An unmarshalling facility provided by Spring OXM for mapping the XML fragment to an object.

The following example shows how to define a StaxEventItemReader that works with a root element named trade, a resource of org/springframework/batch/item/xml/domain/trades.xml, and an unmarshaller called tradeMarshaller.

<bean id="itemReader" class="org.springframework.batch.item.xml.StaxEventItemReader">

<property name="fragmentRootElementName" value="trade" />

<property name="resource" value="org/springframework/batch/item/xml/domain/trades.xml" />

<property name="unmarshaller" ref="tradeMarshaller" />

</bean>

@Bean

public StaxEventItemReader itemReader() {

return new StaxEventItemReaderBuilder<Trade>()

.name("itemReader")

.resource(new FileSystemResource("org/springframework/batch/item/xml/domain/trades.xml"))

.addFragmentRootElements("trade")

.unmarshaller(tradeMarshaller())

.build();

}

Note that, in this example, we have chosen to use an XStreamMarshaller, which accepts an alias passed in as a map with the first key and value being the name of the fragment (that is, a root element) and the object type to bind. Then, similar to a FieldSet, the names of the other elements that map to fields within the object type are described as key/value pairs in the map. In the configuration file, we can use a Spring configuration utility to describe the required alias, as follows:

<bean id="tradeMarshaller"

class="org.springframework.oxm.xstream.XStreamMarshaller">

<property name="aliases">

<util:map id="aliases">

<entry key="trade"

value="org.springframework.batch.sample.domain.trade.Trade" />

<entry key="price" value="java.math.BigDecimal" />

<entry key="isin" value="java.lang.String" />

<entry key="customer" value="java.lang.String" />

<entry key="quantity" value="java.lang.Long" />

</util:map>

</property>

</bean>

@Bean

public XStreamMarshaller tradeMarshaller() {

Map<String, Class> aliases = new HashMap<>();

aliases.put("trade", Trade.class);

aliases.put("price", BigDecimal.class);

aliases.put("isin", String.class);

aliases.put("customer", String.class);

aliases.put("quantity", Long.class);

XStreamMarshaller marshaller = new XStreamMarshaller();

marshaller.setAliases(aliases);

return marshaller;

}

On input, the reader reads the XML resource until it recognizes that a new fragment is about to start. By default, the reader matches the element name to recognize that a new fragment is about to start. The reader creates a standalone XML document from the fragment and passes the document to a deserializer (typically a wrapper around a Spring OXM Unmarshaller) to map the XML to a Java object.
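The fragment-by-fragment idea can be sketched with the JDK's StAX API alone. The example below is a simplification under stated assumptions: FragmentReadSketch is a hypothetical class, and instead of handing each fragment to an OXM unmarshaller it collects the child element text into a Map.

```java
import java.io.StringReader;
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import javax.xml.stream.XMLEventReader;
import javax.xml.stream.XMLInputFactory;
import javax.xml.stream.XMLStreamException;
import javax.xml.stream.events.XMLEvent;

// Hypothetical sketch: one Map per fragment stands in for an unmarshalled object.
class FragmentReadSketch {

    static List<Map<String, String>> readFragments(String xml, String fragmentRoot) {
        List<Map<String, String>> records = new ArrayList<>();
        Map<String, String> current = null; // non-null while inside a fragment
        String field = null;                // child element currently being read
        try {
            XMLEventReader reader =
                    XMLInputFactory.newInstance().createXMLEventReader(new StringReader(xml));
            while (reader.hasNext()) {
                XMLEvent event = reader.nextEvent();
                if (event.isStartElement()) {
                    String name = event.asStartElement().getName().getLocalPart();
                    if (name.equals(fragmentRoot)) {
                        current = new LinkedHashMap<>();   // a new fragment starts
                    } else if (current != null) {
                        field = name;
                        current.put(field, "");
                    }
                } else if (event.isCharacters() && current != null && field != null) {
                    // Text may arrive in several events, so append rather than overwrite.
                    current.merge(field, event.asCharacters().getData(), String::concat);
                } else if (event.isEndElement()) {
                    if (event.asEndElement().getName().getLocalPart().equals(fragmentRoot)) {
                        records.add(current);              // the fragment is complete
                        current = null;
                    }
                    field = null;
                }
            }
        } catch (XMLStreamException e) {
            throw new IllegalStateException("Could not parse XML input", e);
        }
        return records;
    }

    public static void main(String[] args) {
        String xml = "<records><trade><isin>XYZ0001</isin><quantity>5</quantity></trade>"
                + "<trade><isin>XYZ0002</isin><quantity>2</quantity></trade></records>";
        System.out.println(readFragments(xml, "trade").size());
    }
}
```

Because the event reader pulls one event at a time, only the current fragment needs to be held in memory, which is the property that makes StAX suitable for large batch inputs.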

In summary, this procedure is analogous to the following Java code, which uses the injection provided by the Spring configuration:

StaxEventItemReader<Trade> xmlStaxEventItemReader = new StaxEventItemReader<>();

Resource resource = new ByteArrayResource(xmlResource.getBytes());

Map aliases = new HashMap();

aliases.put("trade","org.springframework.batch.sample.domain.trade.Trade");

aliases.put("price","java.math.BigDecimal");

aliases.put("customer","java.lang.String");

aliases.put("isin","java.lang.String");

aliases.put("quantity","java.lang.Long");

XStreamMarshaller unmarshaller = new XStreamMarshaller();

unmarshaller.setAliases(aliases);

xmlStaxEventItemReader.setUnmarshaller(unmarshaller);

xmlStaxEventItemReader.setResource(resource);

xmlStaxEventItemReader.setFragmentRootElementName("trade");

xmlStaxEventItemReader.open(new ExecutionContext());

boolean hasNext = true;

Trade trade = null;

while (hasNext) {

trade = xmlStaxEventItemReader.read();

if (trade == null) {

hasNext = false;

}

else {

System.out.println(trade);

}

}

1.7.2. StaxEventItemWriter

Output works symmetrically to input. The StaxEventItemWriter needs a Resource, a marshaller, and a rootTagName. A Java object is passed to a marshaller (typically a standard Spring OXM Marshaller) which writes to a Resource by using a custom event writer that filters the StartDocument and EndDocument events produced for each fragment by the OXM tools. The following example uses the StaxEventItemWriter:

<bean id="itemWriter" class="org.springframework.batch.item.xml.StaxEventItemWriter">

<property name="resource" ref="outputResource" />

<property name="marshaller" ref="tradeMarshaller" />

<property name="rootTagName" value="trade" />

<property name="overwriteOutput" value="true" />

</bean>

@Bean

public StaxEventItemWriter itemWriter(Resource outputResource) {

return new StaxEventItemWriterBuilder<Trade>()

.name("tradesWriter")

.marshaller(tradeMarshaller())

.resource(outputResource)

.rootTagName("trade")

.overwriteOutput(true)

.build();

}

The preceding configuration sets up the three required properties and sets the optional overwriteOutput=true attribute, mentioned earlier in this chapter for specifying whether an existing file can be overwritten. It should be noted the marshaller used for the writer in the following example is the exact same as the one used in the reading example from earlier in the chapter:

<bean id="customerCreditMarshaller"

class="org.springframework.oxm.xstream.XStreamMarshaller">

<property name="aliases">

<util:map id="aliases">

<entry key="trade"

value="org.springframework.batch.sample.domain.trade.Trade" />

<entry key="price" value="java.math.BigDecimal" />

<entry key="isin" value="java.lang.String" />

<entry key="customer" value="java.lang.String" />

<entry key="quantity" value="java.lang.Long" />

</util:map>

</property>

</bean>

@Bean

public XStreamMarshaller customerCreditMarshaller() {

XStreamMarshaller marshaller = new XStreamMarshaller();

Map<String, Class> aliases = new HashMap<>();

aliases.put("trade", Trade.class);

aliases.put("price", BigDecimal.class);

aliases.put("isin", String.class);

aliases.put("customer", String.class);

aliases.put("quantity", Long.class);

marshaller.setAliases(aliases);

return marshaller;

}

To summarize with a Java example, the following code illustrates all of the points discussed, demonstrating the programmatic setup of the required properties:

FileSystemResource resource = new FileSystemResource("data/outputFile.xml");

Map aliases = new HashMap();

aliases.put("trade","org.springframework.batch.sample.domain.trade.Trade");

aliases.put("price","java.math.BigDecimal");

aliases.put("customer","java.lang.String");

aliases.put("isin","java.lang.String");

aliases.put("quantity","java.lang.Long");

XStreamMarshaller marshaller = new XStreamMarshaller();

marshaller.setAliases(aliases);

StaxEventItemWriter staxItemWriter =

new StaxEventItemWriterBuilder<Trade>()

.name("tradesWriter")

.marshaller(marshaller)

.resource(resource)

.rootTagName("trade")

.overwriteOutput(true)

.build();

staxItemWriter.afterPropertiesSet();

ExecutionContext executionContext = new ExecutionContext();

staxItemWriter.open(executionContext);

Trade trade = new Trade();

trade.setPrice(new BigDecimal("11.39"));

trade.setIsin("XYZ0001");

trade.setQuantity(5L);

trade.setCustomer("Customer1");

staxItemWriter.write(trade);